Data Analysis with Spark

Apache’s lightning-fast engine for data analysis and machine learning

In recent years, there has been a massive shift in the industry towards data-driven decision making backed by very large datasets. This means that we can serve our customers with more relevant, personalized content.

We in the Digital Experience team are tasked with analysing Big Data in order to gather insights and support the product team in its decision making. This includes finding our customers’ top-rated articles. We can then organize outfits related to those items and help customers make choices in the fashion store. Or we can leverage similar customer behaviour to suggest an article they might want in the future.

Problem

As our data grows rapidly, we need a tool that can clean it and train models on it fast enough. With large datasets, a job sometimes takes days to finish, which results in some very frustrated data analysts. Let’s have a look at some of the problems:

- Latency while training the data

- Limited performance optimization

Why is Spark good for data science?

Before we organize and analyse data with Spark, let’s first understand how Spark behaves “under the hood.” Two properties stand out:

- Simple APIs

- Fault tolerance

Fault tolerance makes it possible to analyse large datasets without fear of failure, for example when one node out of 1,000 fails and the whole operation would otherwise need to be performed again.

As personalization becomes an ever more important aspect of the Zalando customer journey, we need a tool that enables us to serve content in near real time. Hence, we decided to use Spark, as it retains fault tolerance and significantly reduces latency.

Note: Spark keeps all data immutable and in-memory. It achieves fault tolerance using ideas from functional programming: lost data is recomputed by replaying the functional transformations over the original dataset.
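To make the replay idea more concrete, here is a minimal sketch in plain Python. It is a toy model and not Spark's API: the `LineageDataset` class and its method names are hypothetical, and a real RDD tracks this kind of lineage per partition across a cluster.

```python
from typing import Callable, List


class LineageDataset:
    """Toy model of an immutable dataset that remembers how it was derived."""

    def __init__(self, source: List[int], lineage: tuple = ()):
        self._source = tuple(source)  # original data, never mutated
        self._lineage = lineage       # ordered transformations applied so far

    def map(self, fn: Callable[[int], int]) -> "LineageDataset":
        # Return a new dataset; the existing one is left untouched.
        return type(self)(list(self._source), self._lineage + (("map", fn),))

    def filter(self, pred: Callable[[int], bool]) -> "LineageDataset":
        return type(self)(list(self._source), self._lineage + (("filter", pred),))

    def compute(self) -> List[int]:
        # Replay every recorded transformation over the original data.
        data = list(self._source)
        for kind, fn in self._lineage:
            if kind == "map":
                data = [fn(x) for x in data]
            else:
                data = [x for x in data if fn(x)]
        return data


base = LineageDataset([1, 2, 3, 4, 5])
derived = base.map(lambda x: x * 10).filter(lambda x: x > 20)

first_result = derived.compute()
# Simulate losing the computed result: the same answer can be rebuilt
# from the original data plus the recorded transformations.
del first_result
recovered = derived.compute()
```

Because nothing is ever modified in place, recomputing after a failure gives the same result as the first run.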

For the sake of comparison, let’s recap the Hadoop way of working:

Hadoop saves intermediate states to disk and communicates over a network. If we consider training an ML (machine learning) model such as logistic regression, the state of each iteration is saved back to disk, which makes the process very slow.

In the case of Spark, it works mostly in-memory and tries to minimize data transportation over a network, as seen below:

Spark is well suited to algorithms like logistic regression, which need multiple iterations over the training data.
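To see why iteration matters, consider this small sketch of logistic regression trained by gradient descent in plain Python. It is not Spark ML: the `train` function, the tiny dataset, and the hyperparameters are all made up for illustration. The point is only that every iteration rescans the same data, so keeping that data in memory avoids a disk round trip per iteration.

```python
import math

# Hypothetical, tiny training set kept in memory: (feature, label).
points = [(-2.0, 0), (-1.5, 0), (-1.0, 0), (1.0, 1), (1.5, 1), (2.0, 1)]


def train(data, iterations=200, learning_rate=0.5):
    weight, bias = 0.0, 0.0
    for _ in range(iterations):
        grad_w = grad_b = 0.0
        # Each iteration walks over the same in-memory data again.
        for x, y in data:
            prediction = 1.0 / (1.0 + math.exp(-(weight * x + bias)))
            grad_w += (prediction - y) * x
            grad_b += prediction - y
        weight -= learning_rate * grad_w / len(data)
        bias -= learning_rate * grad_b / len(data)
    return weight, bias


weight, bias = train(points)


def predict(x):
    # Classify as 1 when the model's probability is above 0.5.
    return 1 if weight * x + bias > 0 else 0
```

With 200 iterations the data is read 200 times, which is exactly the access pattern that suffers when each pass has to go through disk.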

Note:

Spark’s laziness (on transformations) and eagerness (on actions) is how it optimises network communication through its programming model. To support this, Spark defines transformations and actions on Resilient Distributed Datasets (RDDs). Let’s take a look:

Transformations: They are lazy. Their resultant RDD is not immediately computed, e.g. map, flatMap.

Actions: They are eager. Their result is immediately computed, e.g. collect, take(10).

Take the example of filtering logs for errors and then taking the first 10 results. The execution of the filter is deferred until the “take” action is applied. What’s important here is that Spark does not run the filter over all logs: it is executed only when “take” is called, and it stops as soon as 10 error logs have been found.
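The same behaviour can be imitated with Python generators. This is only an analogy, not Spark code: `counting_filter` and the log lines are hypothetical, and `itertools.islice` stands in for the “take” action.

```python
from itertools import islice


def counting_filter(lines, counter):
    # Lazy filter: nothing runs until a consumer asks for values.
    for line in lines:
        counter["inspected"] += 1
        if "ERROR" in line:
            yield line


# Hypothetical log data: every third line is an error.
logs = [f"{'ERROR' if i % 3 == 0 else 'INFO'} event {i}" for i in range(1000)]
counter = {"inspected": 0}

# "Transformation": creating the generator inspects no log lines yet.
errors = counting_filter(logs, counter)
inspected_before_action = counter["inspected"]

# "Action": pulling 10 results drives the filter, which stops once 10 are found.
first_ten = list(islice(errors, 10))
inspected_after_action = counter["inspected"]
```

In this run only 28 of the 1,000 log lines are inspected, because the tenth error sits at index 27 and nothing after it is ever touched.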

Long story short, we know that latency makes a big difference and wastes a lot of time for data analysts. In-memory computation significantly lowers latency, and Spark is smart enough to optimize the work based on the action that is called.

The figure below shows the hierarchy of Spark functioning. The Spark context is:

Spark is organized in a master/workers topology. In the context of Spark, the driver program is a master node whereas the executor nodes are the workers. Each worker node runs the same task and returns the results to the master node. The resource distribution is handled by a cluster manager.

A Spark application is a set of processes running on a cluster.

All these processes are coordinated by a driver program, which:

- Runs the code that creates the SparkContext, creates RDDs, and sends off transformations and actions.

The processes that run the computation and store the data of your application are the executors, which:

- Return computed data to the driver.

- Provide in-memory storage for cached RDDs.
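The division of labour between the driver and the executors can be mimicked on a single machine. The sketch below is an analogy rather than Spark code: a thread pool plays the role of the executors, and the `driver` and `run_task` functions are hypothetical names.

```python
from concurrent.futures import ThreadPoolExecutor


def run_task(partition):
    # Work done on an "executor": square each value, then sum the partition.
    return sum(x * x for x in partition)


def driver(data, num_executors=3):
    # The "driver" splits the data, hands one partition to each worker,
    # and combines the partial results that come back.
    partitions = [data[i::num_executors] for i in range(num_executors)]
    with ThreadPoolExecutor(max_workers=num_executors) as pool:
        partial_results = list(pool.map(run_task, partitions))
    return partial_results, sum(partial_results)


partials, total = driver(list(range(1, 10)))
```

Every worker runs the same task on its own slice of the data, and only the small partial results travel back to the driver.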

For Big Data processing, the most common form of data is key-value pairs. In fact, in the 2004 MapReduce research paper the designers state that key-value pairs were a key choice in designing MapReduce. Spark enables us to project complex data types down to key-value pairs as Pair RDDs.

Useful: Pair RDDs allow you to act on each key in parallel or regroup data across a network. Moreover, they provide some additional methods such as groupByKey(), reduceByKey(), and join().

The data is distributed over different nodes, and operations like groupByKey shuffle it over the network.

We know that shuffling data over a network is expensive. I’ll explain shortly why the data is shuffled.

Let’s take an example:

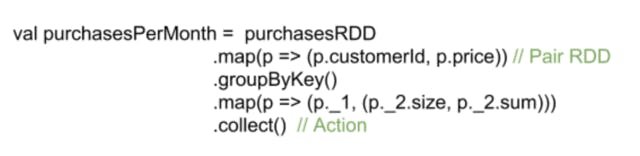

Goal: Calculate how many articles each individual buys and how much money they spend over the course of a month.

Here, we can see that groupByKey shuffles all of the data over the network. If a record doesn't absolutely need to be sent, we shouldn't send it. reduceByKey first combines the values for each key within each partition, so using it instead of groupByKey reduces the data flow over the network.
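The difference in network traffic can be illustrated with a small simulation in plain Python. This is not the Spark API: the `monthly_totals` function, the customer names, and the prices are invented for the example, and "shuffled" simply counts the records that would have to leave their partition.

```python
# Hypothetical purchases, already split across three worker partitions.
# Each record is (customer, price).
partitions = [
    [("anna", 20.0), ("anna", 15.0), ("ben", 35.0), ("anna", 5.0)],
    [("ben", 10.0), ("anna", 50.0), ("ben", 5.0), ("anna", 10.0)],
    [("cara", 80.0), ("anna", 25.0)],
]


def monthly_totals(parts, combine_locally):
    def merge(records):
        # Sum (article_count, money_spent) per customer.
        totals = {}
        for key, (count, spent) in records:
            old_count, old_spent = totals.get(key, (0, 0.0))
            totals[key] = (old_count + count, old_spent + spent)
        return totals

    shuffled = []
    for part in parts:
        records = [(customer, (1, price)) for customer, price in part]
        if combine_locally:
            # reduceByKey style: one record per customer leaves the partition.
            records = list(merge(records).items())
        shuffled.extend(records)  # everything in `shuffled` crosses the network
    return merge(shuffled), len(shuffled)


grouped, grouped_shuffled = monthly_totals(partitions, combine_locally=False)
reduced, reduced_shuffled = monthly_totals(partitions, combine_locally=True)
```

Both approaches give the same totals per customer, but the groupByKey-style run moves all 10 records while the reduceByKey-style run moves only 6. The gap widens as the number of purchases per customer grows.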

Optimizing with Partitioners

There are a few different kinds of partitioners available:

- Hash partitioners

- Range partitioners

Partitioning can bring enormous performance gains, especially in the shuffling phase.
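To give a feel for the two strategies, here is a rough sketch in plain Python. It only models the idea behind hash and range partitioning and is not how Spark implements its partitioners: the function names, the customer IDs, and the range boundaries are assumptions made for the example.

```python
from bisect import bisect_left


def hash_partition(key: int, num_partitions: int) -> int:
    # Python's % yields a non-negative result for a positive divisor.
    return hash(key) % num_partitions


def range_partition(key: int, upper_bounds: list) -> int:
    # upper_bounds must be sorted and are inclusive;
    # keys above the last bound go to one final partition.
    return bisect_left(upper_bounds, key)


customer_ids = [3, 17, 42, 8, 99, 250, 61]

by_hash = {}
for cid in customer_ids:
    by_hash.setdefault(hash_partition(cid, 4), []).append(cid)

by_range = {}
for cid in customer_ids:
    by_range.setdefault(range_partition(cid, [10, 50, 100]), []).append(cid)
```

In both cases a given key always lands on the same partition. That is what lets key-based operations find all records for a key in one place instead of shuffling them again.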

Spark SQL for Structured data

SQL is widely used for analytics, but it's a pain to connect data processing pipelines like Spark or Hadoop to an SQL database. Spark SQL not only brings advanced database optimisations to Spark, but also seamlessly intermixes SQL queries with Scala.

Spark SQL is a component of the Spark stack. It has three main goals:

- High performance, achieved by using techniques from the database world.

- Supports relational data processing.

- Supports new data sources like JSON.

Summary

In this article, we covered how Spark can be optimized for data analysis and machine learning. We discussed how latency becomes the bottleneck for large datasets, as well as the role of in-memory computation, which enables data scientists to perform analysis in near real time.

The highlights of Spark functionality that make life easier:

- Spark SQL for structured data, which helps in executing queries over data held in memory or persisted on disk.

- Spark ML for classifying data with different models such as logistic regression.

- Pair RDDs, which hold key-value pairs and help with data exploration and analysis.

- Partitioning, which optimizes jobs up front by reducing network shuffle.

We believe this will take personalization to a whole new level, thus improving the Zalando user journey.

Discuss Spark in more detail with Nadeem on Twitter.
