From Event-Driven Chaos to a Blazingly Fast Serving API

In this post, we explain how we replaced an event-driven system with a high-performance API capable of serving millions of requests per second with single-digit-millisecond P99 latency.

Real-time data access is critical in e-commerce, ensuring accurate pricing and availability. At Zalando, our event-driven architecture for Price and Stock updates became a bottleneck, introducing delays and scaling challenges.

This post covers how we redesigned our approach and built a blazingly fast API capable of serving millions of requests per second with single-digit-millisecond latency. You'll learn about the caching strategies, low-latency optimizations, and architectural decisions that enabled us to deliver this performance.

The Product Platform with No Read API

In 2016, Zalando built a microservices architecture where independent CRUD APIs onboarded different parts of product data. Once complete, each product was materialised as an event, requiring teams to consume the event stream to serve product data via their own APIs.

In practice, this approach distributed the challenges of API serving across the company. A simple request—"I’m building a new feature and need access to product data. Where do I get it?"—had an unreasonable answer: "Subscribe to our event stream, replay events from the dawn of time, and build your own local store."

Teams with engineering capacity consumed events, modified data to fit their needs, and exposed their own APIs or event streams. Those without the capacity relied on an existing unified data source, such as our Presentation API, inheriting its version of product data. This led to competing sources of truth.

A good analogy is the children's whispering game—product data was altered at each step, and by the end, it no longer resembled the original. With no data lineage, there was no way to trace attributes back to their intended meaning.

The Offer Composition Problem

At Zalando, an Offer represents a merchant selling a Product at a specific price with a certain stock level. To serve the presentation view of a Product Offer, a multi-stage event-driven system merged Product, Price, and Stock events into a single structure. This structure underwent multiple stages of transformation, including aggregation and enrichment, before being stored in the datastore of our Presentation API, which is called by our Fashion Store's GraphQL aggregator.

This architecture made Offer processing slow, expensive, and fragile. Frequent stock and price updates were processed alongside mostly static Product data, with over 90% of each payload unchanged—wasting network, memory, and processing resources. During Cyber Week, stock and price events could be delayed by up to 30 minutes, resulting in a poor customer experience.

The three Product Offer formats (Alpha, Beta, Gamma, in the diagram above) deviated significantly from their base formats. Since other teams could access events from intermediary stages, they developed dependencies on these formats.

The Mission: Decoupling Product and Offer Data

By 2022, it was clear that the Offer composition problem would become a barrier to business growth if left unresolved. A global project, Product Offer Data Split (PODS), was launched to tackle the issue.

The goal was to remove large, unchanged Product data from event streams, eliminating the Offer pipeline bottleneck. A new serving layer would serve Product and Offer data independently or as a combined format for Presentation.

- /products/{product-id}: Core Product details

- /products/{product-id}/offers: Offers our Merchants have available for a Product

- /product-offers/{product-id}: Combined Product-Offer for our Presentation API

To succeed, our new serving layer, the Product Read API (PRAPI), needed to match or exceed the performance of the datastore it was replacing.

As the team dug deeper into the problem, one question emerged: Could PRAPI outperform all locally stored copies of Product data?

If so, a simple request—"Where do I get Product data?"—could finally have a simple answer: "Call the Product Read API."

PRAPI Architecture

PRAPI had the following high-level requirements:

- Low-latency retrieval – P99 latency of 50ms for single-item requests and 100ms for batch retrieval

- Resilience to extreme traffic spikes on individual products

- Country-level isolation – Prevent failures from cascading across our EU markets

To meet these requirements, PRAPI was designed with four main components, each an independent Deployment on Kubernetes with tailored scaling rules. Each component incorporates end-to-end non-blocking I/O, leveraging Netty’s EventLoop with Linux-native Epoll transport. DynamoDB ensures high availability and fast lookups when cache misses occur.

Country-Level Isolation / Getting Data In

To achieve country-level isolation, we deploy multiple instances of PRAPI, known as Market Groups, each serving a subset of our countries. Routing configuration lets us shift traffic between Market Groups dynamically, so we can isolate internal or canary test traffic from high-value country traffic.

Each Market Group's Updaters scale horizontally based on lag, up to the number of partitions in the source stream. To ensure rapid processing of millions of products, each pod:

- Reads batches of 250 products

- Subpartitions events by Product ID

- Issues 10 concurrent batch writes of 25 items to DynamoDB

Scaling to hundreds of concurrent batch writes placed the bottleneck at DynamoDB’s write capacity units, which we could increase to populate a new Market Group in mere minutes if needed.
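The Updater steps above can be sketched roughly as follows. This is a minimal illustration, not PRAPI's actual code: the event shape, the `batch_write` callable (a stand-in for a DynamoDB batch write) and the use of a CRC32 hash for subpartitioning are all assumptions, as is the idea that subpartitioning exists to keep updates for one product in order.

```python
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor
from zlib import crc32

READ_BATCH = 250   # products read per batch (from the post)
WRITE_BATCH = 25   # items per batch write (from the post)
CONCURRENCY = 10   # concurrent batch writes per pod (from the post)


def subpartition(events, lanes=CONCURRENCY):
    """Group events by a hash of the product ID so one product always lands in one lane."""
    buckets = defaultdict(list)
    for event in events:
        buckets[crc32(event["product_id"].encode()) % lanes].append(event)
    return buckets


def chunks(items, size=WRITE_BATCH):
    """Yield consecutive slices of at most `size` items."""
    for start in range(0, len(items), size):
        yield items[start:start + size]


def write_lane(lane_events, batch_write):
    # Batches inside a lane are written one after another (assumed: preserves per-product order).
    for batch in chunks(lane_events):
        batch_write(batch)
    return len(lane_events)


def process(events, batch_write):
    """Write one read batch using up to CONCURRENCY lanes in parallel; returns items written."""
    lanes = subpartition(events)
    with ThreadPoolExecutor(max_workers=CONCURRENCY) as pool:
        written = pool.map(lambda lane: write_lane(lane, batch_write), lanes.values())
        return sum(written)
```

Because lanes are chosen by hash, they are not guaranteed to hold exactly 25 items each, which is why each lane is chunked again before writing.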

Outperforming DynamoDB

PRAPI was designed to be a fast-serving caching layer on top of DynamoDB. Here, we leaned heavily on the high-performance Caffeine cache. Using its async loading cache, we configured a 60-second cache time, with the final 15 seconds as the stale window: during those last 15 seconds, retrieving a cache entry triggers a background refresh from DynamoDB.
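To make the stale window concrete, here is a small language-neutral sketch of the same idea in Python. It is not Caffeine and not PRAPI's code; the class name, the `loader` callable and the injectable `clock` and `executor` are illustrative assumptions. In Caffeine itself, this behaviour is commonly expressed by combining `expireAfterWrite` with `refreshAfterWrite`, though the post does not state the exact settings used.

```python
import time
from concurrent.futures import ThreadPoolExecutor

TTL = 60.0           # total cache time in seconds (from the post)
STALE_WINDOW = 15.0  # final seconds in which a read triggers a refresh (from the post)


class StaleWindowCache:
    """Toy cache: serve fresh entries, refresh in the background once an entry is stale."""

    def __init__(self, loader, clock=time.monotonic, executor=None):
        self._loader = loader          # e.g. a DynamoDB lookup (assumed)
        self._clock = clock
        self._executor = executor or ThreadPoolExecutor(max_workers=4)
        self._entries = {}             # key -> (value, written_at)
        self._refreshing = set()       # keys with a refresh already in flight

    def get(self, key):
        now = self._clock()
        entry = self._entries.get(key)
        if entry is None or now - entry[1] >= TTL:
            return self._load(key)     # miss or expired: load synchronously
        value, written_at = entry
        if now - written_at >= TTL - STALE_WINDOW and key not in self._refreshing:
            self._refreshing.add(key)
            # Serve the cached value now; reload it off the request path.
            self._executor.submit(self._refresh, key)
        return value

    def _load(self, key):
        value = self._loader(key)
        self._entries[key] = (value, self._clock())
        return value

    def _refresh(self, key):
        try:
            self._load(key)
        finally:
            self._refreshing.discard(key)
```

The effect is that a frequently read key never reaches the 60-second expiry: it is reloaded in the background sometime after 45 seconds, so readers of hot products rarely wait on DynamoDB. The sketch skips the locking a production cache would need.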

Optimizing Cache Hits

Customer-driven traffic divides our catalogue into a large cold section and much smaller hot sections:

- Cold: Niche items that receive infrequent traffic but must remain highly available

- Hot: Everyday items, such as white socks and t-shirts, that are accessed frequently

- Extremely Hot: Limited-edition releases, such as Nike sneakers, generate sudden massive traffic spikes

But even if just 10% of our 10 million products are hot, caching 1 million large (~1000-line JSON) product payloads per pod is simply not feasible.

To solve this, we leveraged a powerful load balancing algorithm for our products component, Consistent Hash Load Balancing (CHLB).

In CHLB, each backend pod is assigned to multiple random positions on a hash ring. When a request comes in, the product-id is hashed to locate its position on the ring. The nearest pod clockwise on the ring then consistently serves that request. This partitions our catalogue between the available pods, allowing small local caches to effectively cache hot products. The wider we scale, the higher the portion of our catalogue that is cached.
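The ring lookup described above can be sketched in a few lines. This is an illustrative model rather than the load balancer's implementation: deriving ring positions from an MD5 hash of the pod name, the class and function names, and the default of 100 positions per pod are assumptions made for the example.

```python
import bisect
import hashlib


def ring_hash(value: str) -> int:
    """Map a string to a 64-bit position on the ring."""
    return int.from_bytes(hashlib.md5(value.encode()).digest()[:8], "big")


class HashRing:
    def __init__(self, pods, positions_per_pod=100):
        # Every pod owns several positions, which evens out the share of keys per pod.
        self._points = sorted(
            (ring_hash(f"{pod}#{i}"), pod)
            for pod in pods
            for i in range(positions_per_pod)
        )
        self._hashes = [position for position, _ in self._points]

    def pod_for(self, product_id: str) -> str:
        # First ring position at or after the key's hash, wrapping around at the end.
        index = bisect.bisect_left(self._hashes, ring_hash(product_id))
        return self._points[index % len(self._points)][1]
```

With this model, `HashRing(pods).pod_for("product-42")` returns the same pod on every call, so that pod's small local cache sees all traffic for the product. Adding a pod only takes keys away from existing pods and never shuffles keys between them, which is why each pod's cached share of the catalogue shrinks, and the cached portion overall grows, as the deployment scales out.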

Our batch-component unpacks batch requests, issues concurrent single-item lookups, and aggregates responses. It uses the Power of Two Random Choices algorithm, routing requests to the less-loaded of two randomly selected pods.
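For readers unfamiliar with Power of Two Random Choices, the core decision fits in one function. The sketch below is a toy model under stated assumptions: load is measured as a count of in-flight requests per pod, and the `simulate` helper never completes requests, which a real balancer obviously would.

```python
import random


def pick_pod(in_flight, rng=random):
    """Sample two distinct pods and return the one with fewer in-flight requests."""
    first, second = rng.sample(list(in_flight), 2)
    return first if in_flight[first] <= in_flight[second] else second


def simulate(pods, requests, rng=random):
    """Route `requests` requests without ever completing any; returns the load per pod."""
    in_flight = {pod: 0 for pod in pods}
    for _ in range(requests):
        in_flight[pick_pod(in_flight, rng)] += 1
    return in_flight
```

Comparing just two candidates avoids scanning every pod on each request, yet keeps the load far more even than a single random pick would.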

Solving the Competing Sources of Truth Problem

Delivering Product data centrally via API solved the Offer composition problem and laid the foundation for future applications. But success hinged on adoption—teams migrating off old formats, standardizing on the new, and decommissioning legacy applications.

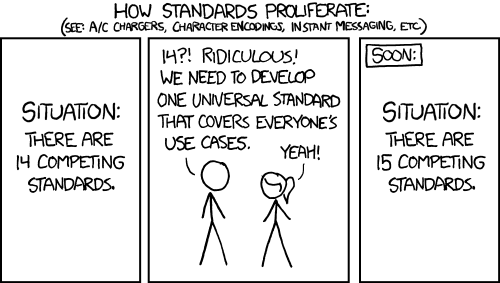

With ~350 engineering teams and thousands of deployed applications, many relying directly or indirectly on Product data, migration was always going to be complex. Without a clear transition path, legacy systems persist, and much like the XKCD comic above, it's easy to end up in a worse state than before.

To ensure adoption, PRAPI took ownership of all legacy representations of Product and Offer data. Engineers meticulously analyzed and replicated existing transformations within PRAPI, allowing client teams to request data in their required format via the Accept header:

- application/json: New standard format for all teams

- application/x.alpha-format+json: Legacy (previously on event stream)

- application/x.beta-format+json: Legacy (previously on event stream)

- application/x.gamma-format+json: Legacy (from Presentation API)
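Format selection via the Accept header could look roughly like the sketch below. The media types come from the list above, but everything else is hypothetical: the post does not describe the legacy transformations, so `to_alpha` and `to_beta` are invented placeholders, gamma is left out for brevity, and the simplified parsing ignores quality values.

```python
import json

CANONICAL = "application/json"


# Hypothetical stand-ins for the real legacy transformations.
def to_alpha(product):
    return {"sku": product["id"], "title": product["name"]}


def to_beta(product):
    return {"product": {"id": product["id"]}, "label": product["name"]}


TRANSFORMERS = {
    CANONICAL: lambda product: product,
    "application/x.alpha-format+json": to_alpha,
    "application/x.beta-format+json": to_beta,
}


def render(product, accept=CANONICAL):
    """Return (status, content_type, body) for the first supported media type in Accept."""
    for part in accept.split(","):
        media_type = part.split(";")[0].strip().lower()
        if media_type in ("", "*/*"):
            media_type = CANONICAL
        transform = TRANSFORMERS.get(media_type)
        if transform is not None:
            return 200, media_type, json.dumps(transform(product))
    return 406, None, ""  # none of the requested formats is available
```

Keeping every representation behind one registry means a legacy format can be retired by deleting a single entry once its sunset period ends.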

Additionally, temporary components within PRAPI emitted alpha and beta formats back onto the legacy event streams. This enabled legacy applications to be decommissioned immediately, while teams gradually migrated off the legacy formats within a fixed sunset period.

Performance Results

To accurately measure PRAPI's performance from a client perspective, we use metrics from our ingress load-balancer, Skipper.

Single GET requests return large (~1000-line JSON) payloads with content-type transformations but still achieve sub-10ms P99 latency.

Batch GET requests, handling up to 100 items, scale predictably with an expected increase in response time, closely aligning with the P999 of single GETs.

PRAPI performs better under load: as traffic increases, more of the product catalogue remains cached, reducing latency. This effect was visible in our cluster-wide latency graphs when we load-tested PRAPI.

Advanced Tuning

This section covers the advanced tuning techniques we applied to reduce tail latency in PRAPI.

Java Flight Recorder (JFR) was invaluable in fine-tuning the JVM. By capturing telemetry from underperforming pods and visualising it in JDK Mission Control, we identified Garbage Collection (GC) pauses and ensured no blocking tasks ran on NIO thread pools.

Open-Source Load Balancer Contributions

We contributed the following improvements to the CHLB algorithm in Skipper, our Kubernetes Ingress load balancer:

Minimising Cache Loss During Scaling – Previously, pod rebalancing on scale-up/down caused mass cache invalidations, routing traffic to cold caches. We fixed this by assigning each pod to 100 fixed locations on the ring, reducing cache misses to 1/N, where N is the previous number of pods.

Preventing Overload from Hyped Products – We added the Bounded Load algorithm, capping per-pod traffic at 2× the average. Once exceeded, requests spill over clockwise to the next non-overloaded pod, keeping hyped products cached and distributed.
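The spill-over behaviour of Bounded Load can be modelled as below. This is a simplified sketch and not Skipper's implementation: load is counted as in-flight requests, ring positions are derived from an MD5 hash of the pod name, and the exact cap formula (which counts the request being placed so the cap is never zero) is an assumption.

```python
import bisect
import hashlib
import math


def ring_hash(value: str) -> int:
    return int.from_bytes(hashlib.md5(value.encode()).digest()[:8], "big")


class BoundedLoadRing:
    def __init__(self, pods, positions_per_pod=100, factor=2.0):
        self._points = sorted(
            (ring_hash(f"{pod}#{i}"), pod)
            for pod in pods
            for i in range(positions_per_pod)
        )
        self._hashes = [position for position, _ in self._points]
        self._factor = factor                  # 2x the average (from the post)
        self.load = {pod: 0 for pod in pods}   # in-flight requests per pod

    def acquire(self, product_id: str) -> str:
        """Pick the first pod clockwise from the key that is below the load cap."""
        total = sum(self.load.values()) + 1    # include the request being placed
        cap = math.ceil(self._factor * total / len(self.load))
        start = bisect.bisect_left(self._hashes, ring_hash(product_id))
        for step in range(len(self._points)):
            pod = self._points[(start + step) % len(self._points)][1]
            if self.load[pod] < cap:
                self.load[pod] += 1
                return pod
        raise RuntimeError("unreachable: some pod is always below the cap")

    def release(self, pod: str) -> None:
        """Call when the request completes."""
        self.load[pod] -= 1
```

Under light traffic, a product is always served by its usual pod. When one product dominates traffic, requests overflow to the next pods in ring order, so the same few neighbours absorb the spike and keep that product cached.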

Eliminating Garbage Collection Pauses

Key learning: The best way to eliminate GC pauses is to avoid object allocation altogether.

- Products – Cache Product data as a single ByteArray instead of an ObjectNode graph, reducing heap pressure.

- Product-Sets – Avoid reading individual gzipped responses into memory. Instead, store them in Okio buffers and concatenate them directly in the response object, eliminating unnecessary gunzip/re-gzip operations.
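One way to combine gzipped payloads without decompressing them relies on the gzip format allowing several members to be concatenated into one valid stream. The sketch below shows that trick in Python rather than with Okio. Whether PRAPI frames its batch responses as a JSON array in exactly this way is an assumption, and the function name is made up for the example.

```python
import gzip
import json

# Pre-compressed JSON framing fragments, built once and reused for every response.
OPEN, COMMA, CLOSE = (gzip.compress(fragment) for fragment in (b"[", b",", b"]"))


def concat_gzipped(members):
    """Join already-gzipped JSON documents into one gzipped JSON array, byte for byte."""
    parts = [OPEN]
    for index, member in enumerate(members):
        if index:
            parts.append(COMMA)
        parts.append(member)   # cached bytes are reused as-is, never inflated
    parts.append(CLOSE)
    return b"".join(parts)
```

For example, joining three cached members produced by `gzip.compress(json.dumps(item).encode())` yields a body that a multi-member-aware client decodes as a single JSON array. The server only copies bytes, so no intermediate object graph is allocated per item.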

LIFO vs FIFO

Key learning: In latency-sensitive applications, FIFO queuing can create long-tail latency spikes.

- Load Balancer – While we aim to avoid request queuing, switching to LIFO reduced long-tail latency spikes when queuing occurred.

- DynamoDB Clients – We configured a primary DynamoDB client with a 10ms timeout and a fallback client with a 100ms timeout for retries. This prevented FIFO queuing on the primary client during DynamoDB latency spikes.
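The primary and fallback client arrangement can be sketched with two async callables and two timeouts. The timeouts are taken from the post; the function name and the idea of modelling each client as an async callable are assumptions for illustration, and a real setup would configure two separate DynamoDB SDK clients instead.

```python
import asyncio

PRIMARY_TIMEOUT = 0.010   # 10 ms (from the post)
FALLBACK_TIMEOUT = 0.100  # 100 ms (from the post)


async def get_item(key, primary, fallback):
    """Try the primary client briefly, then retry once on the fallback client.

    `primary` and `fallback` are async callables standing in for two
    separately configured clients.
    """
    try:
        return await asyncio.wait_for(primary(key), PRIMARY_TIMEOUT)
    except asyncio.TimeoutError:
        # The slow call is cancelled so it cannot hold up requests queued behind it
        # on the primary client; the retry gets a more generous time budget.
        return await asyncio.wait_for(fallback(key), FALLBACK_TIMEOUT)
```

If the fallback also exceeds its budget, the timeout propagates to the caller, which can then decide how to fail the request.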

Scaling Teams Alongside Architecture

The success of the PODS project required more than just technical changes—it also required a reorganization of teams to match the new architecture. Following Conway’s Law and CQRS principles, the Product department was restructured into two stream-aligned teams:

- Partners & Supply – Manages data ingestion (Command side)

- Product Data Serving – Focuses on aggregation and retrieval (Query side)

This shift reduced dependencies, improved scalability, and accelerated product updates. With principal engineers driving architectural simplifications, the new structure ensures resilience for peak events like Cyber Week and lays the foundation for future innovations, including unified product data models and multi-tenant solutions.

What’s Next?

With the core architecture in place, future projects will focus on unified product data models, multi-tenant solutions, and advanced analytics capabilities. The foundation is set—PODS has redefined how Zalando scales product data.

A special thank you to the SPP and POP engineers, as well as all the teams across Zalando who contributed to this large migration effort—it would not have been possible without you.

We're hiring! Do you like working in an ever-evolving organization such as Zalando? Consider joining our teams as a Software Engineer!